High Throughput

Approaching the theoretical bandwidth of Gigabit Ethernet, using modest CPUs

RTI Connext provides a significant performance advantage over most other messaging and integration middleware on every supported platform.

Throughput approaches the theoretical bandwidth of Gigabit Ethernet, even on modest CPUs.

Latency increases linearly with data payload size.

Low latency and high throughput are sustained even at high levels of message traffic.

RTI benchmarked Connext with a wide variety of latency and throughput tests using RTI Performance Test (PerfTest). PerfTest includes scripts to run the tests on different platforms.

The results show that Connext provides sub-millisecond latency that scales linearly with data payload size, and throughput that exceeds 90% of line rate over Gigabit Ethernet.
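To make the 90% figure concrete, the arithmetic can be sketched as below. This is only an illustration, not part of PerfTest: the function names are hypothetical, the 1024-byte payload is an arbitrary example, and the calculation counts application payload only, ignoring Ethernet, IP, UDP and RTPS header overhead.

```python
# Back-of-the-envelope line-rate arithmetic (illustrative sketch only).
# Assumption: only application payload bytes are counted; protocol
# headers and inter-frame gaps are ignored.

GIGABIT_BPS = 1e9  # nominal Gigabit Ethernet line rate, in bits per second


def line_rate_fraction(payload_bytes: int, samples_per_sec: float,
                       link_bps: float = GIGABIT_BPS) -> float:
    """Return the fraction of the link consumed by payload traffic."""
    return payload_bytes * 8 * samples_per_sec / link_bps


def samples_needed(payload_bytes: int, fraction: float = 0.90,
                   link_bps: float = GIGABIT_BPS) -> float:
    """Return the sample rate required to reach a given fraction of line rate."""
    return fraction * link_bps / (payload_bytes * 8)


if __name__ == "__main__":
    rate = samples_needed(1024)  # hypothetical 1024-byte samples
    print(f"samples/s for 90% of 1 Gbps: {rate:,.0f}")
    print(f"check: {line_rate_fraction(1024, rate):.2%} of line rate")
```

Under these assumptions, 1024-byte samples would need to flow at roughly 110,000 samples per second to fill 90% of a 1 Gbps link. Measured numbers on real hardware should come from PerfTest itself.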

The PerfTest benchmarking tool, its documentation, and a video tutorial are all available free of charge.

To run these benchmarks on your own hardware, download PerfTest or contact your local RTI representative.

This graph shows the one-way latency, without load, between a Publisher and a Subscriber running on two Linux nodes connected by a 10 Gbps network.

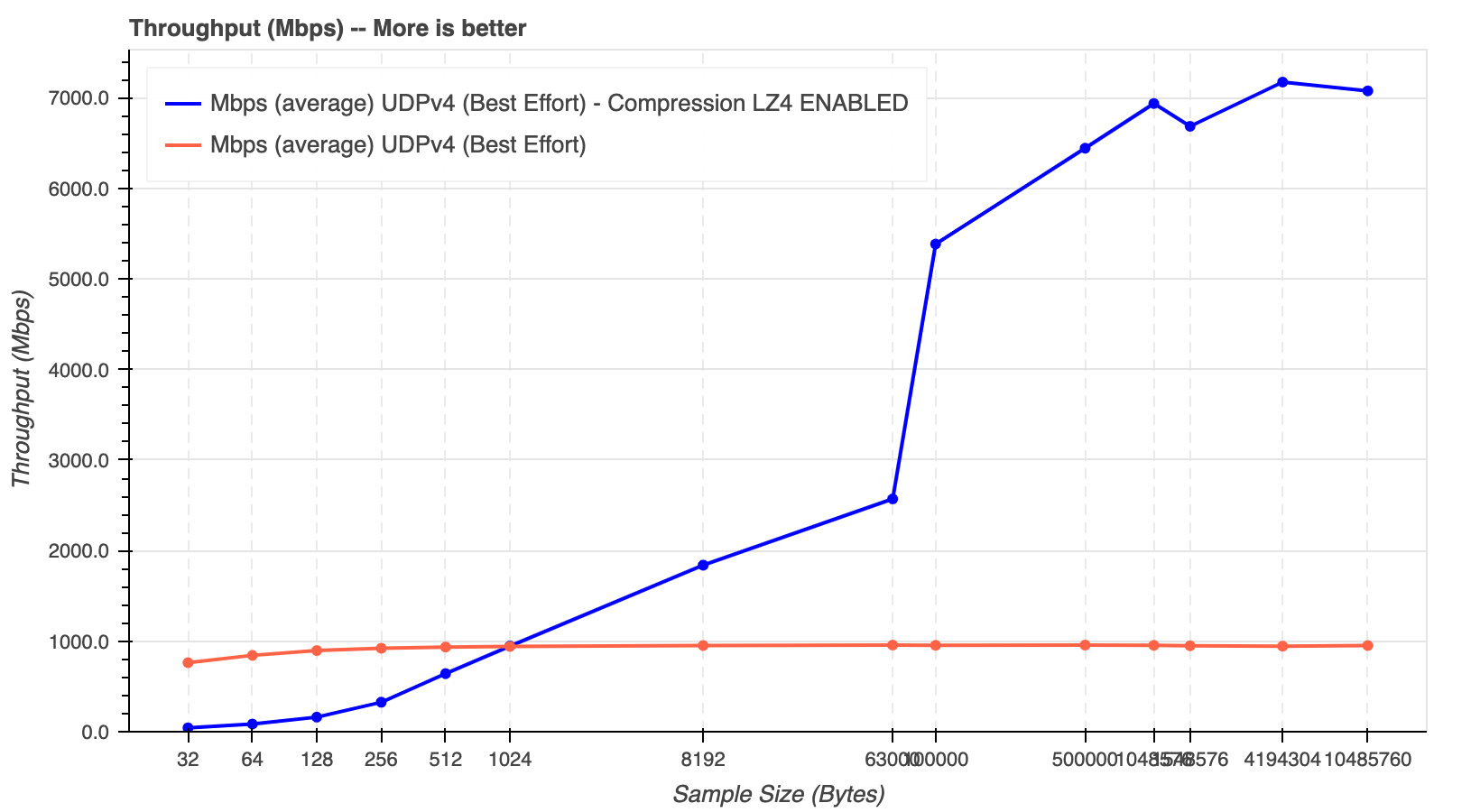

This graph shows the expected throughput for one-to-one communication between two Linux nodes connected by a 10 Gbps network.

This graph shows the expected throughput when data compression is used for one-to-one communication between two Linux nodes connected by a 1 Gbps network.
